In recent years, with the increasing demand for complex environmental interaction in unmanned systems, 3D scene understanding has gradually become a research focus. While traditional frameworks such as Multi-View Stereo (MVS) and Simultaneous Localization and Mapping (SLAM) can achieve geometric reconstruction, they exhibit limitations in high-level semantic perception and scene understanding. Recent advancements in Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS) have introduced novel paradigms for 3D scene representation through differentiable frameworks, but the ultimate objective of scene understanding requires not only precise geometric and appearance reconstruction but also the integration of semantic features.

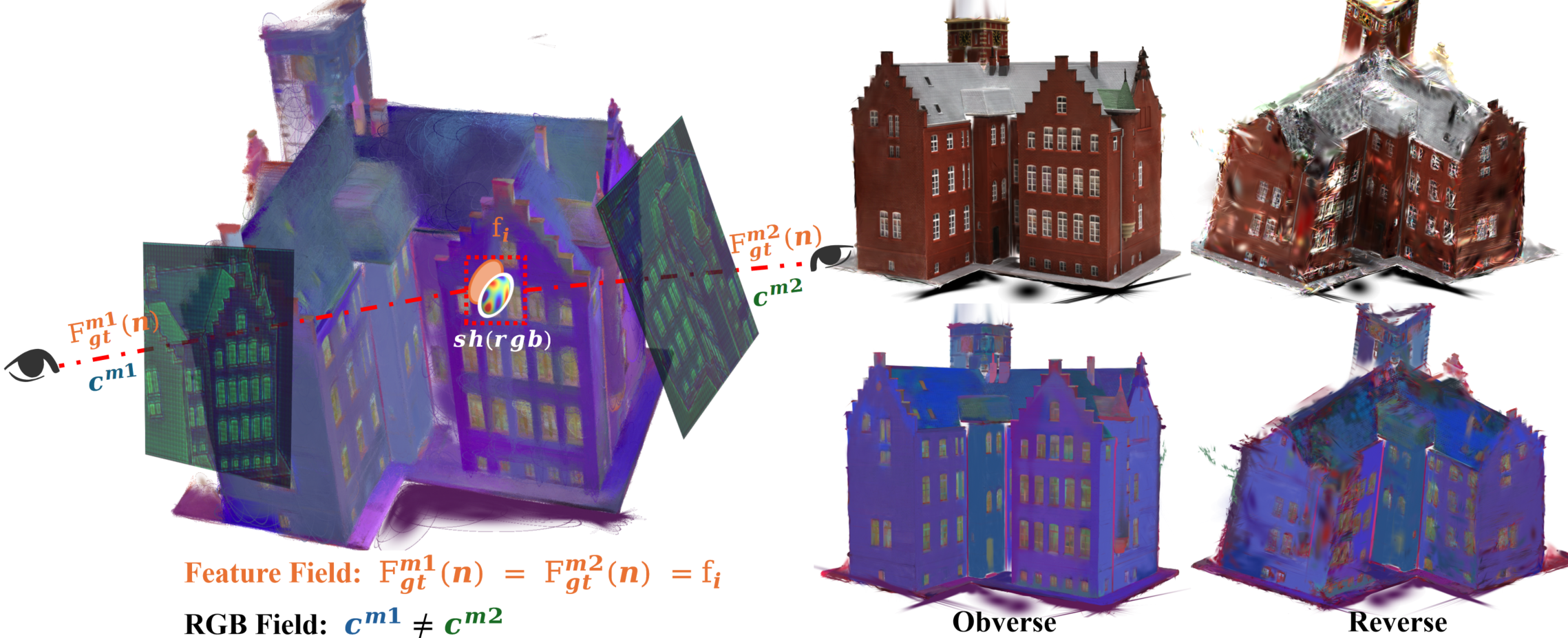

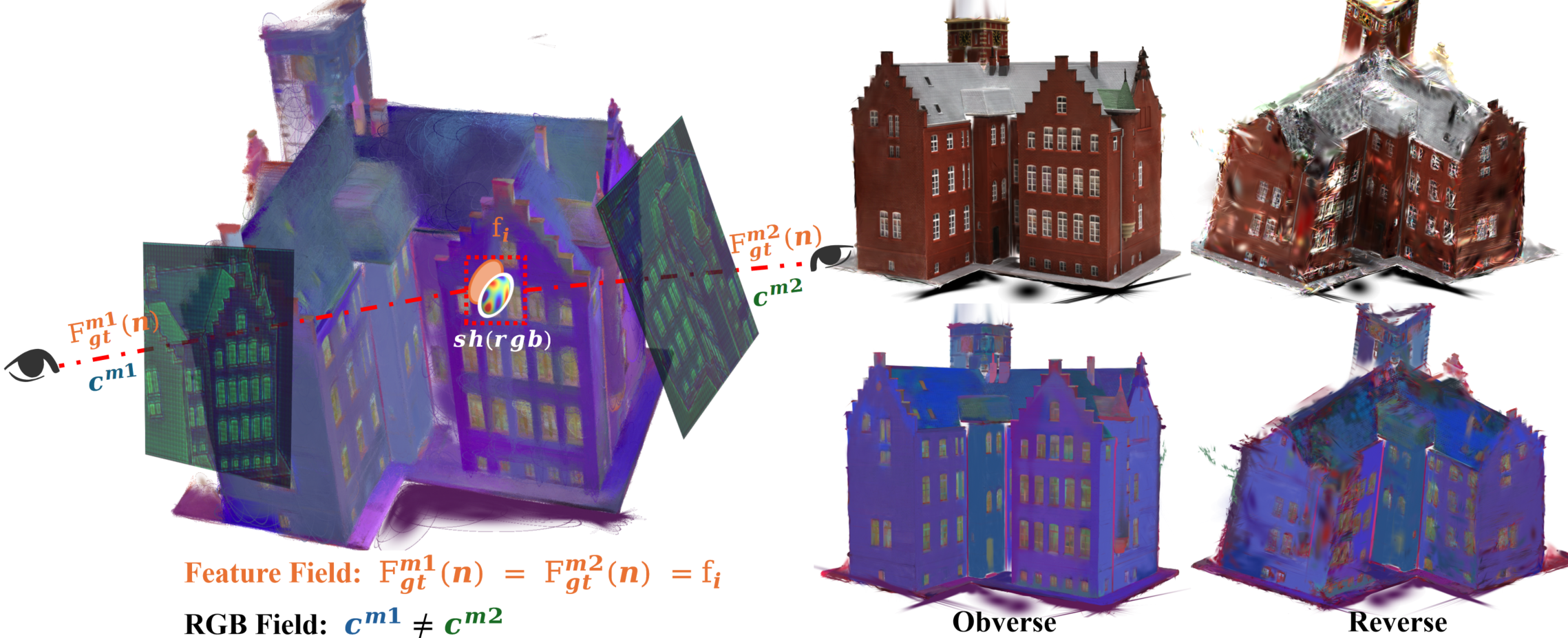

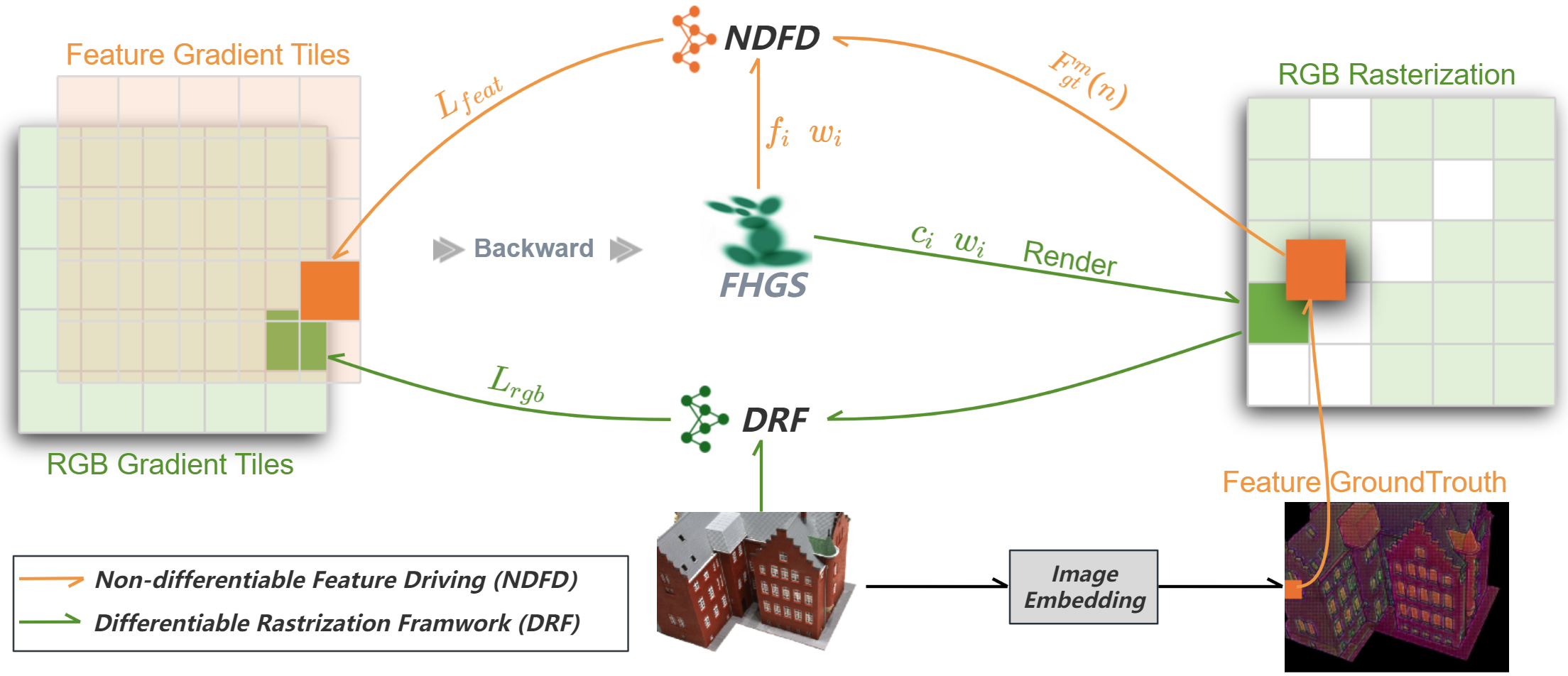

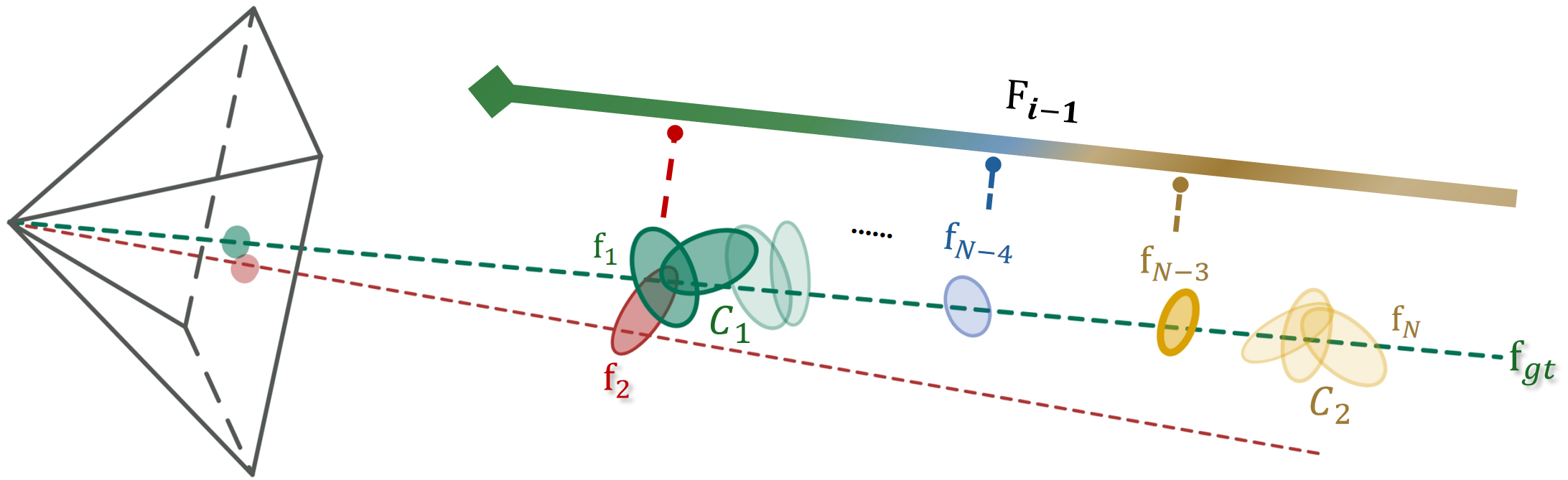

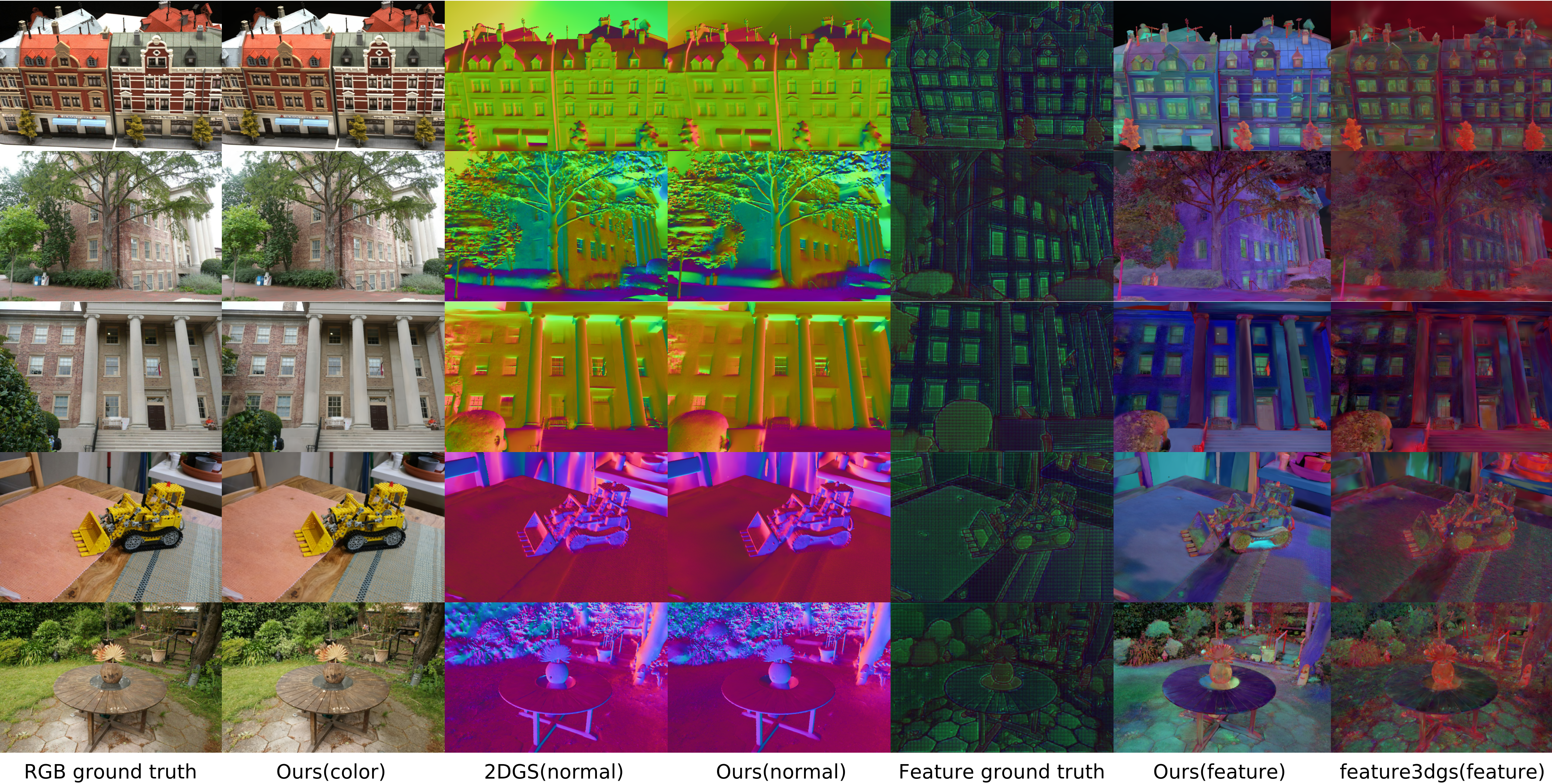

We propose FHGS (Feature-Homogenized Gaussian Splatting), a feature fusion method built on the 3DGS framework. Leveraging a differentiable framework, FHGS establishes bidirectional associations between 2D features and 3D feature fields, enabling end-to-end optimization of multi-view-consistent feature fusion. Drawing on physics-inspired principles from electric field modeling, we introduce a dual-drive mechanism that combines External Potential Field Driving with Internal Feature Clustering Driving, optimizing global feature consistency without modifying the intrinsic feature representation.
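To make the idea of coupling 2D features with a 3D feature field more concrete, the following is a minimal toy sketch, not the FHGS formulation itself. It assumes the standard front-to-back alpha compositing used in Gaussian splatting to render a per-pixel feature from per-Gaussian feature vectors, and it stands in for the two driving terms with simple placeholders: a squared error against a 2D target feature (an "external" pull) and a neighbor-similarity penalty (an "internal" clustering pull). All function names, the neighbor lists, and the weight `lam` are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence


def composite_features(features: Sequence[Sequence[float]],
                       alphas: Sequence[float]) -> List[float]:
    """Front-to-back alpha compositing of per-Gaussian features along one ray.

    features[i] is the feature vector of the i-th Gaussian (nearest first),
    alphas[i] is its opacity contribution at this pixel, in [0, 1].
    """
    dim = len(features[0])
    out = [0.0] * dim
    transmittance = 1.0  # fraction of light not yet absorbed
    for feat, alpha in zip(features, alphas):
        weight = transmittance * alpha
        for k in range(dim):
            out[k] += weight * feat[k]
        transmittance *= 1.0 - alpha
    return out


def external_loss(rendered: Sequence[float], target: Sequence[float]) -> float:
    """Placeholder 'external' term: squared error to a 2D target feature."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target))


def internal_loss(features: Sequence[Sequence[float]],
                  neighbors: Sequence[Sequence[int]]) -> float:
    """Placeholder 'internal' term: mean squared distance to listed neighbors."""
    total, count = 0.0, 0
    for i, nbrs in enumerate(neighbors):
        for j in nbrs:
            total += sum((a - b) ** 2 for a, b in zip(features[i], features[j]))
            count += 1
    return total / count if count else 0.0


def joint_loss(features, alphas, target, neighbors, lam: float = 0.1) -> float:
    """Weighted sum of the two placeholder terms (lam is an assumed weight)."""
    rendered = composite_features(features, alphas)
    return external_loss(rendered, target) + lam * internal_loss(features, neighbors)


if __name__ == "__main__":
    feats = [[1.0, 0.0], [0.0, 1.0]]   # two Gaussians, 2-D features
    alphas = [0.5, 0.5]
    print(composite_features(feats, alphas))
    print(joint_loss(feats, alphas, [0.5, 0.25], [[1], [0]]))
```

In a real pipeline these terms would be differentiated with respect to the per-Gaussian features and minimized jointly over many views; the sketch only shows how a rendered 2D feature depends on the 3D feature field and how two objectives can be combined.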

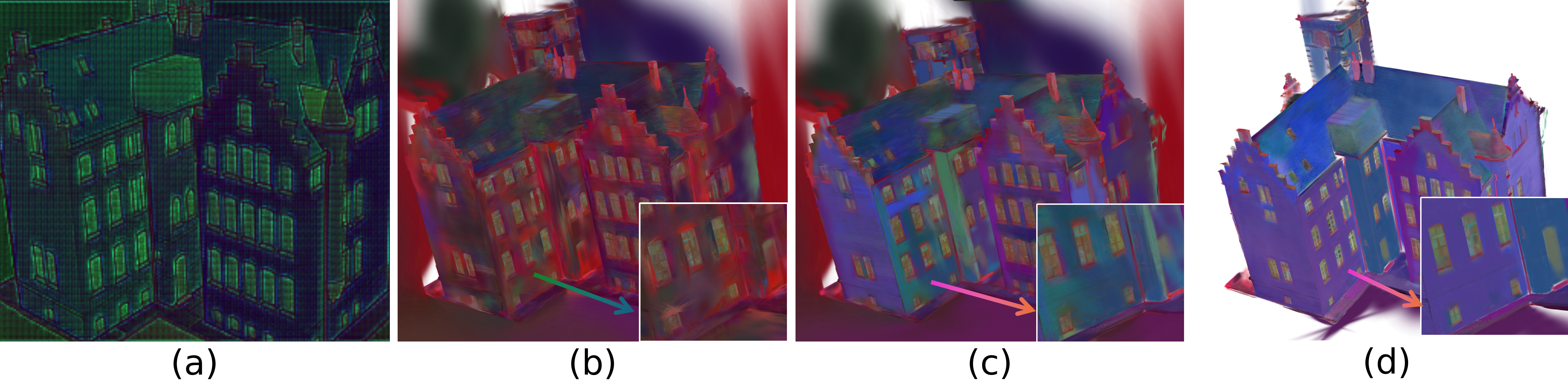

We pioneer the integration of non-differentiable features into the differentiable 3DGS framework, fundamentally resolving the inherent contradiction between the anisotropic nature of Gaussian primitives and the isotropic requirements of semantic features.

Drawing inspiration from electric field modeling, we design a joint optimization strategy combining external potential field driving and internal feature clustering driving, characterized by intuitive logic, computational efficiency, and strong interpretability.

@inproceedings{DuanFHGS2025,
  title={FHGS: Feature-Homogenized Gaussian Splatting for 3D Scene Understanding with Multi-View Consistency},
  author={Duan, Q. G. and Zhao, Benyun and Han, Mingqiao and Huang, Yijun and Chen, Ben M.},
  booktitle={Advances in Neural Information Processing Systems},
  year={2025}
}